Introduction

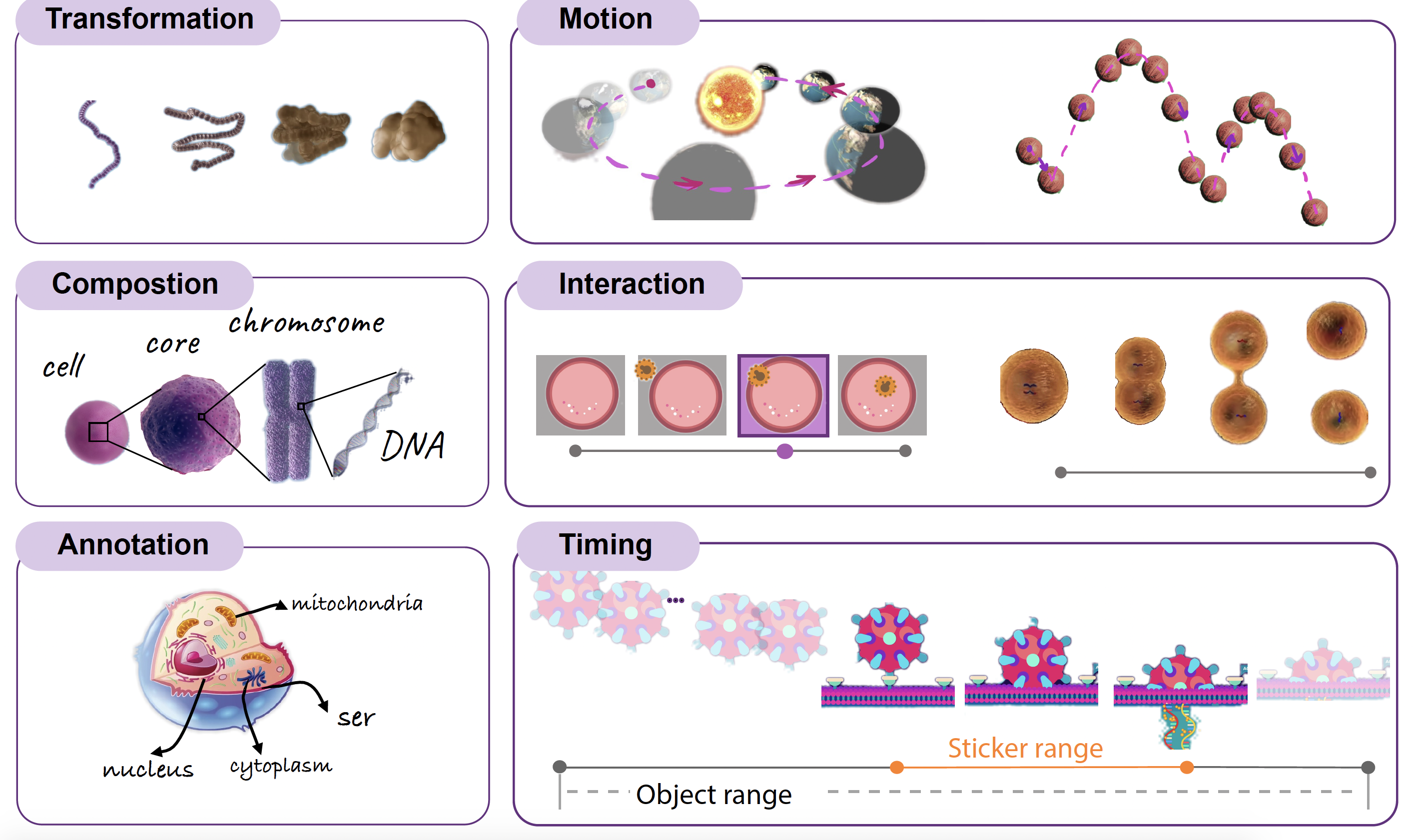

Videos are an effective tool for knowledge sharing. Unlike linear text, in which learners need to mentally connect concepts one line at a time, videos offer an integrated graphic representation of objects and relationships over space and time. For instance, students can watch the successive transformations in the metamorphosis of a butterfly.

Such graphical representations have been shown to support dual coding and improve recall. In notes, they also align more closely with the representation used in the video. That is, capturing in the notes a graphical image (e.g., of a cell in a video of mitosis) that corresponds to the representation in the video can enhance retention and provide a useful study tool.

However, learners can find it difficult to take notes from video content. To take notes effectively, they need to extract individual objects (e.g., the sun) from videos, write down their understanding of the relationships between those objects, and correctly record event sequences over time. Current practices require repeatedly pausing and rewinding videos, taking static screenshots of individual video frames, and manually transcribing narration. All of this increases the time and effort required for note-taking and makes learning from videos less effective.

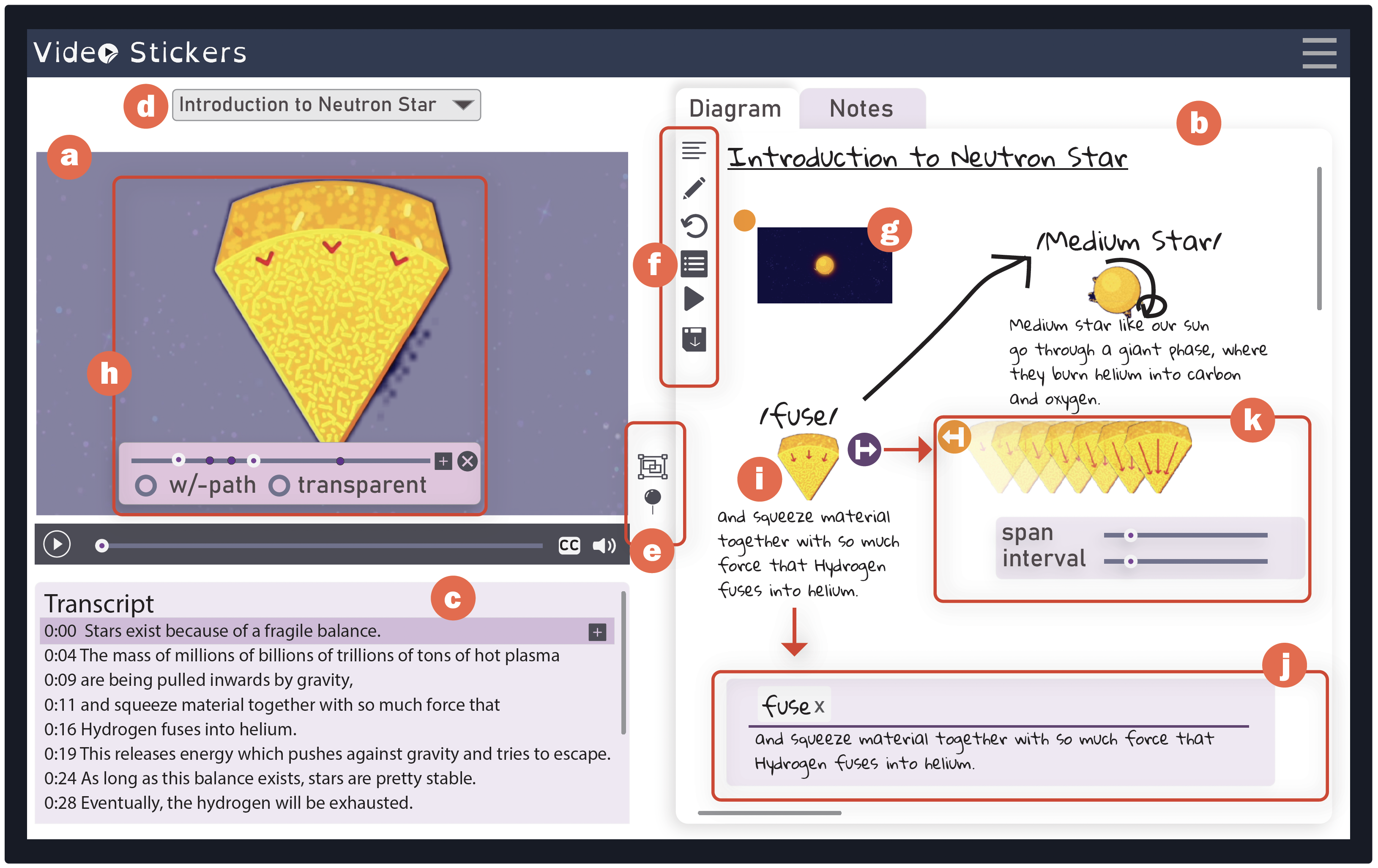

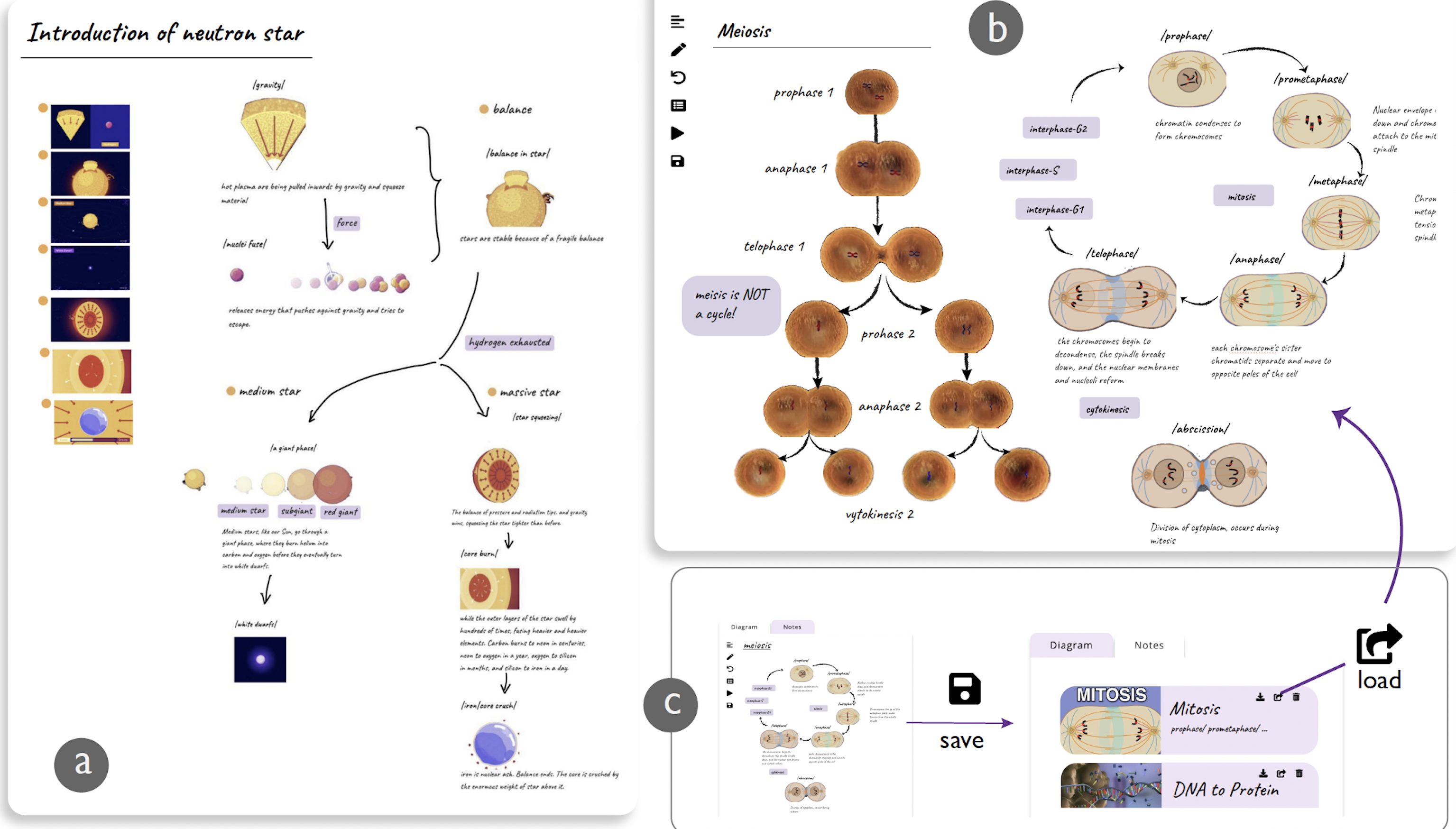

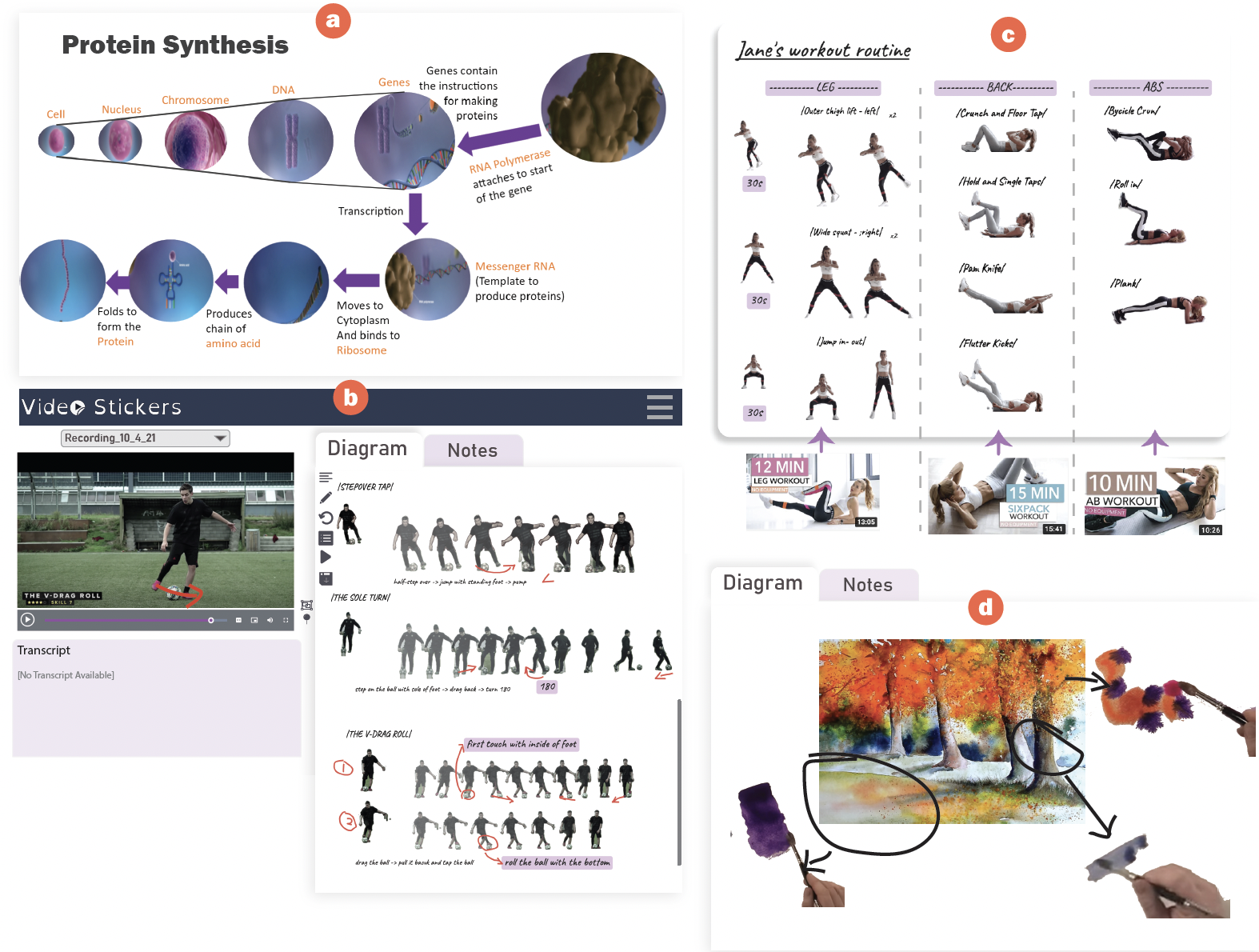

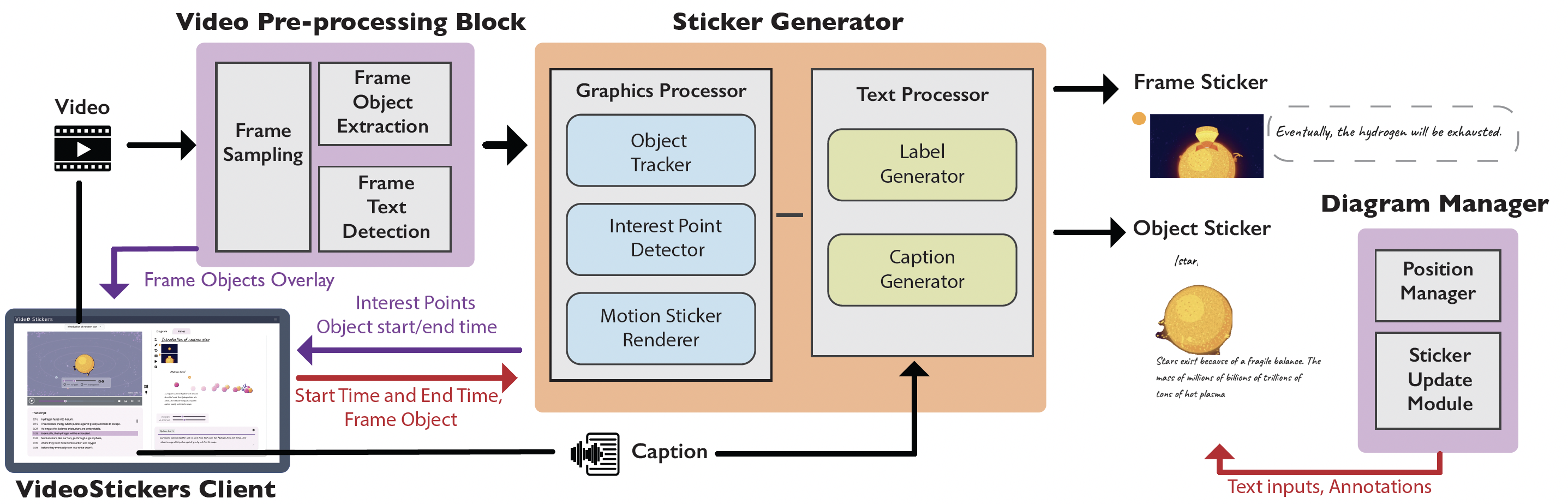

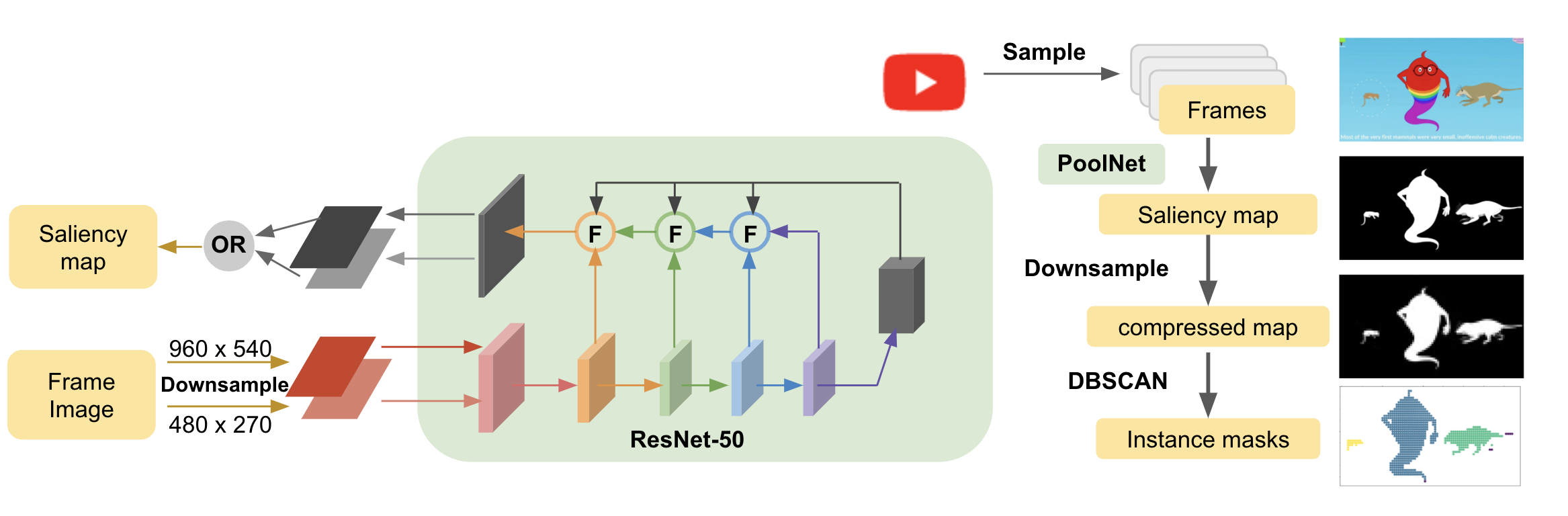

We propose VideoStickers, a tool designed to support visual note-taking by extracting expressive content and narratives from videos as stickers.

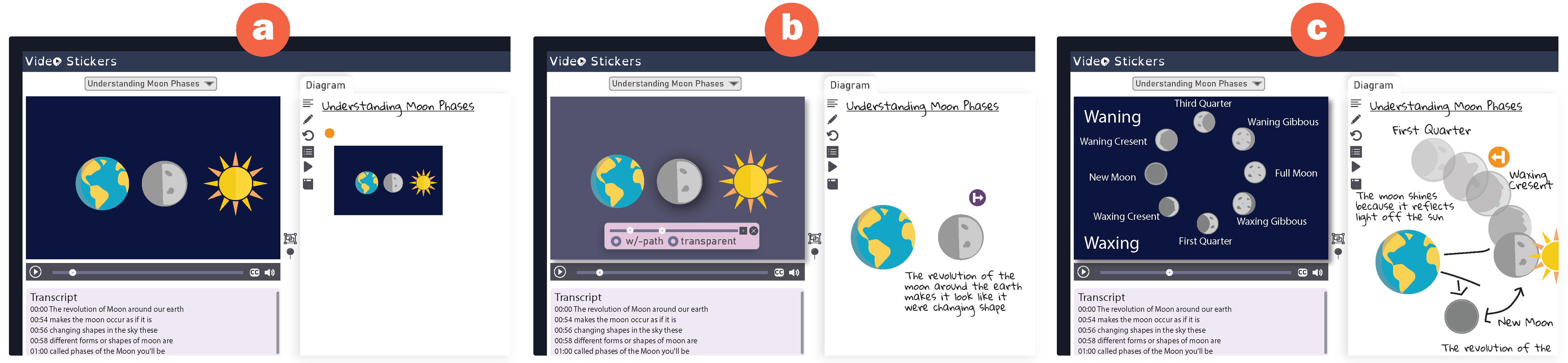

Note-taking using VideoStickers: While watching the video about Phases of the Moon, the viewer (a) first captures the video frame for 'new-moon' as a frame sticker. Later, the viewer (b) extracts frame objects for Earth and Moon as object stickers, and (c) expands the object sticker for the revolution of the moon around the earth and annotates key phases.